Document Versioning in Couchbase

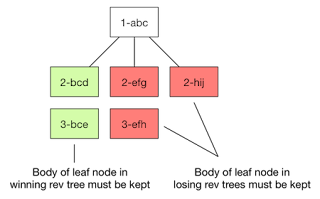

Couchbase Server does not support document revisions out of the box, but it is fairly simple to implement them on the application side. This article describes ways to do this. The following topics are covered:

- Handling concurrent access
- Relevant attributes
- One document per version
- Embedded revision tree
- Combined approaches

Handling concurrent access

In a versioning context, multiple users or threads create new versions, possibly at nearly the same time. So it makes sense to shed some light on concurrent access before we talk about versioning approaches. You will most probably need to combine concurrency handling with versioning. Couchbase supports two ways of handling concurrent access to the same document.

C(ompare) A(nd) S(wap): This is the optimistic approach. Each document has a built-in property, the CAS value. The CAS value changes as soon as somebody updates the document. So the idea is to implement something like the following on th